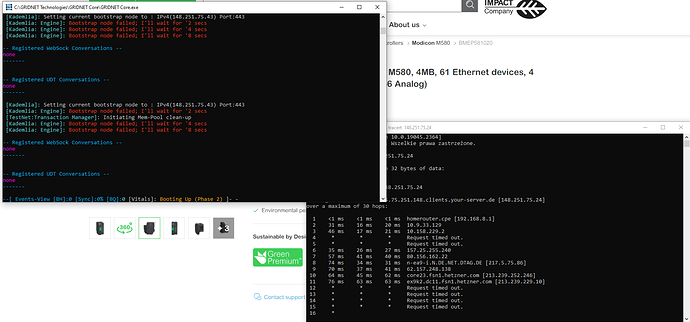

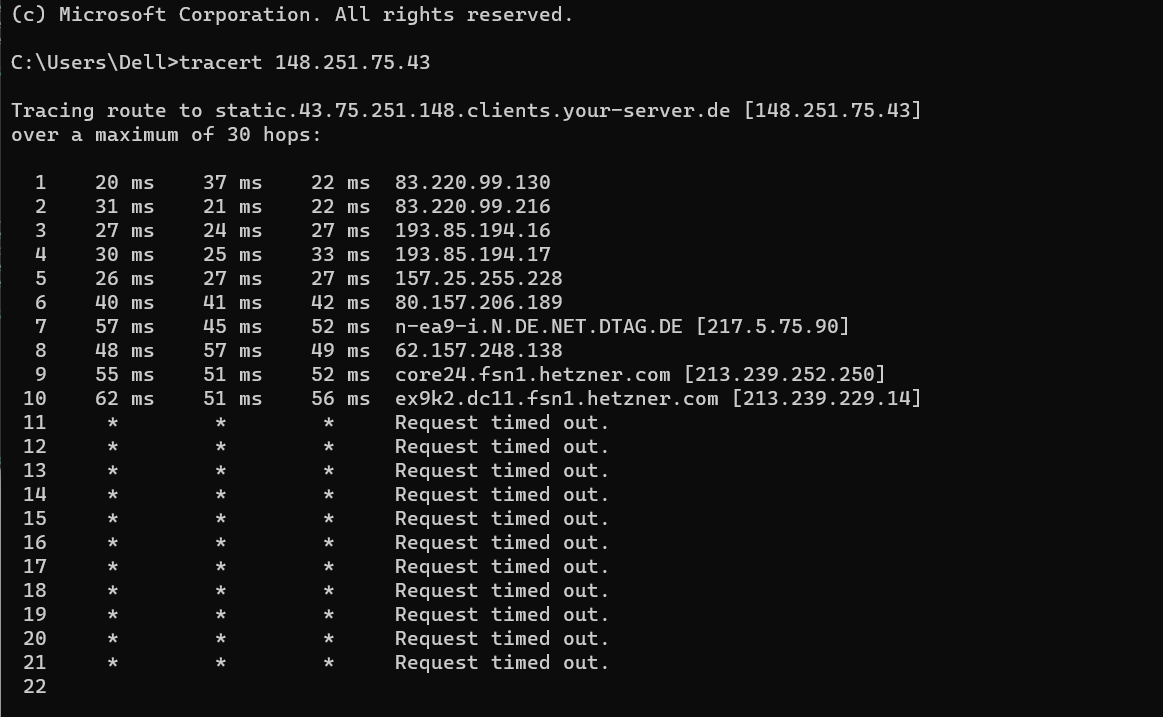

So I’ve been fighting with my PCs and CORE for a while now. I’ve disabled some very weird stuff that lingered on port 80 in a fresh Win10 install, and I almost got it to run. My issue is shown on the screencap. While the Node itself starts up fine, it appears that it gets choked by some NAT crap that my ISP is pulling (you can see it in tracert). It gets blocked twice (30 secs each) before going through the third time. I can’t seem to get past it, any ideas?


Hello there Alpacalypse!

So glad to be hearing from you, let me take a look…

Blaming the ISP would be an easy way to reassure oneself, but that is highly doubtful. Data exchange is taking place over UDP, targeted at port 80 (no ISP would dare block THAT).

It’s 99.99% certain that something is wrong with the configuration of your router/NAT (the one that you own).

You might verify this by, well, switching the device / hotspot / Internet provider just for a sec.

While traceroute might not be a good indication of the actual situation, the above resembles a working link.

Let me explain: ‘trace-route’ (tracert on Windows) works with the ICMP protocol (which is encapsulated within the IP protocol).

UDP, which UDT is built upon, works at that very same layer (also directly within IP), but it is a separate protocol, handled independently of ICMP.

In other words, UDP != ICMP.

The very fact that traceroute works does not, in any way, indicate that your router would pass UDP packets, even if it does so with ICMP packets.

Further, some nodes on the data-path might choose not to respond to ICMP inquiries at all, which is why there’s a timeout on some of the nodes on your path, even though the data-packets clearly do get through.
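Since tracert only tells you something about ICMP, the direct way to check the UDP path is to send UDP and see whether anything comes back. Below is a minimal Python sketch of such a probe. `udp_probe` is a hypothetical helper, not part of GRIDNET Core, and it is only meaningful if the far end is a responder you control (for example a small UDP echo script on a machine outside your network), since a GRIDNET node won’t necessarily answer an arbitrary payload.

```python
import socket
from typing import Optional


def udp_probe(host: str, port: int, payload: bytes = b"ping",
              timeout: float = 2.0) -> Optional[bytes]:
    """Send a single UDP datagram and wait for a reply.

    This exercises the UDP path itself, which an ICMP-based tracert does not.
    Returns the reply payload, or None if nothing came back in time.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(payload, (host, port))
        try:
            data, _addr = sock.recvfrom(65535)
        except socket.timeout:
            return None  # no reply: dropped somewhere, or nobody answered
        except ConnectionResetError:
            return None  # Windows reports an ICMP "port unreachable" this way
        return data
```

A reply means UDP made it there and back; a `None` while tracert looks fine is exactly the case where ICMP results say nothing about UDP.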

Thus,

I bet you’ve got:

- UDP communication not being routed by your router to your computer (enable UPnP, if it’s available on the router)

- To verify this, switch ISP for a sec, using the same computer, before you dig into this any deeper (there might still be something ‘wrong’ with your computer).

Looking forward to hearing back from you!

Enable a hotspot on your mobile device, connect to it, and see what happens (with your old link disabled).

Yea, can’t do that, I am working on a PC without a Wi-Fi card. Core was installed on a fresh Win10. Windows Defender is disabled for my LAN network connection, and the router’s firewall is not enabled. I still had to remove some Windows process listening on port 80 (no idea what it did; it was running from the start of the new Win10 install). After all that, I managed to get the Core web service to start (before, I got prompts that it was unable to start). My router has no UPnP, but I can set up manual port forwarding. In the screen shown, port forwarding is turned on. If I remove it, there is no change in Core behavior. As you can see, I am in a bit of a pickle, because I tried a lot of stuff to fix that (even disabling BranchCache with some haphazard registry modifications that caused my PC to die violently, hence the fresh Win10).

You might want to verify your installation source, as no native Windows installation would occupy the most wanted port on Earth, port 80, honestly.

That would be a paramount disruption for any Windows administrator wanting to deploy, say, any kind of web-server, and that is simply unheard of.

Port 80 = web-server. That’s it. We know that and even Microsoft does.

I had that issue regarding port listening:

Exactly as pictured, right after a fresh install from the Microsoft Win10 ISO. As of now, I am running Core; I checked `netstat -ab` and it does show Core listening on ports 80 and 443. I still get “Bootstrap node failed” tho.

Do note that BranchCache is never enabled by default and requires explicit customization (quite sophisticated involvement from an experienced network administrator), say, a customized Windows installer.

Yes, I only meant the first of the three. I’m still trying to figure out what I can check/change in my setup to get it to bootstrap.

Just keep in mind that you may also launch a hotspot on your mobile device, and most Android devices can share it via USB, just to narrow down the problem.

Do you mind telling me the model of your router?

High tech Huawei B535-232 (shit tier T-mobile router). I totally forgot about USB internet, gotta try it, thanks

Any way for you to verify whether your router has an IPv4 address assigned? (Not your computer behind it, but the router itself.)

Yea, I have my WAN address 100.94.79.137, but it is still within the ISP’s NAT, so it is not the same as my external IP shown by WhatIsMyIP websites (also IPv4).

Folks, assuming everything is fine on the workstation, the undesired behavior must be caused by the way NATs encountered on the data-path behave.

The question is - can we alter the way they function?

Neither UPnP nor explicit entries in NAT rules should be required.

That is because the router should accept data-packets coming from the same [ENDPOINT, PORT] tuple it had FIRST dispatched datagrams to (e.g. originating from our software); to be exact, if it has already created a 5-element tuple {SRC_PORT, SRC_IP, DST_PORT, DST_IP, PROTOCOL} within its cache.

Otherwise, the router MUST drop these packets or assume these datagrams are destined for itself (unless it’s acting as a bridge / in pass-through / DMZ mode).
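To make the 5-tuple rule above concrete, here is a toy model in Python. It is a sketch under simplifying assumptions: a single public IP, a NAT that leaves source ports unchanged, and no entry expiry (real NATs often rewrite ports and time entries out). `ToyNat` and `FiveTuple` are hypothetical names; 100.94.79.137 is the WAN address mentioned in this thread, while 192.168.8.100 is a made-up LAN address.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class FiveTuple:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str


class ToyNat:
    """Hypothetical NAT with one public IP that keeps source ports unchanged."""

    def __init__(self, public_ip: str) -> None:
        self.public_ip = public_ip
        # Expected inbound 5-tuple -> private (ip, port) it should be forwarded to
        self.sessions: Dict[FiveTuple, Tuple[str, int]] = {}

    def outbound(self, pkt: FiveTuple) -> FiveTuple:
        # Record the 5-tuple a reply would carry (source/destination swapped)
        reply = FiveTuple(pkt.dst_ip, pkt.dst_port, self.public_ip, pkt.src_port, pkt.proto)
        self.sessions[reply] = (pkt.src_ip, pkt.src_port)
        # Rewrite the private source address to the public one
        return FiveTuple(self.public_ip, pkt.src_port, pkt.dst_ip, pkt.dst_port, pkt.proto)

    def inbound(self, pkt: FiveTuple) -> Optional[FiveTuple]:
        target = self.sessions.get(pkt)
        if target is None:
            return None  # no matching cached 5-tuple: drop
        return FiveTuple(pkt.src_ip, pkt.src_port, target[0], target[1], pkt.proto)
```

With `nat = ToyNat("100.94.79.137")`, an outbound datagram from 192.168.8.100:443 to 148.251.75.43:443 creates the session, so the reply from 148.251.75.43:443 is forwarded back to the LAN host, while a datagram from 148.251.75.43:57854 matches no cached 5-tuple and is dropped.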

Remember that routers do not care about layer 4+ (UDP/TCP); they care about layer 3 (IP), for the most part.

This particular case aside, @CodesInChaos, please verify whether the new protocol-multiplexing mechanisms send datagrams from the same source port IN ALL CASES: requests, responses, and all currently multiplexed protocols (those over UDP).

@Alpacalypse, you may try enabling DMZ/pass-through mode on your router or disabling its ‘firewall’. That would cause all datagrams to be, well… passed through.

BUT… you are most certainly in a double-NAT scenario, which wouldn’t help if the source port changes during transmission, unless the upstream routers are the ‘smart ones’… So @CodesInChaos, please verify what I’ve mentioned. I know there might be multiple sockets involved.
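On the multiple-sockets point: each UDP socket owns its own local port, so if different parts of a program talk to the same peer through different sockets, every NAT on the way sees different flows. The Python sketch below uses plain sockets on localhost (it is not GRIDNET code; `observed_source_ports` is a hypothetical helper) to show what the receiving side observes in both cases.

```python
import socket
from typing import List


def observed_source_ports(senders: List[socket.socket],
                          receiver: socket.socket) -> List[int]:
    """Send one datagram from each socket in turn; return the source port the receiver saw."""
    dest = receiver.getsockname()
    ports = []
    for sock in senders:
        sock.sendto(b"x", dest)  # an unbound socket gets an ephemeral port here
        _data, (_ip, port) = receiver.recvfrom(16)
        ports.append(port)
    return ports
```

Sending from two separate sockets yields two different source ports, while sending twice from the same socket yields the same port twice, which is the property the same-source-port check above is after.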

Thanks for the suggestions. Sadly, my router does not support DMZ. The firewall on my router is disabled, as is Windows Defender. While Core is not connecting to 148.251.75.43 over IPv4 (“Bootstrap node failed”), I can still use the exact same IP to access Gridnet easily. I will keep on digging, will try changing internet connection today to see if it was a router issue.


Cheers

EDIT: I am using mobile network now (same ISP tho, T-Mobile) and I get the same errors on Core, so it is not a router issue. Will look further.

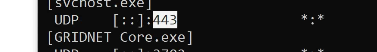

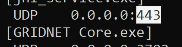

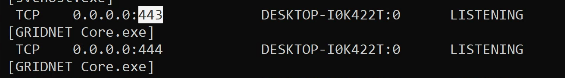

In GRIDNET OS, quite a few protocols are now being multiplexed over TCP and UDP, all happening at port 443.

You may want to check if you can spot entries looking like the ones below (all need to be there):

- a socket bound to all local IPv6 interfaces [::] on port 443 UDP

-

socket bound to any local IPv4 interface port 443 UDP:

-

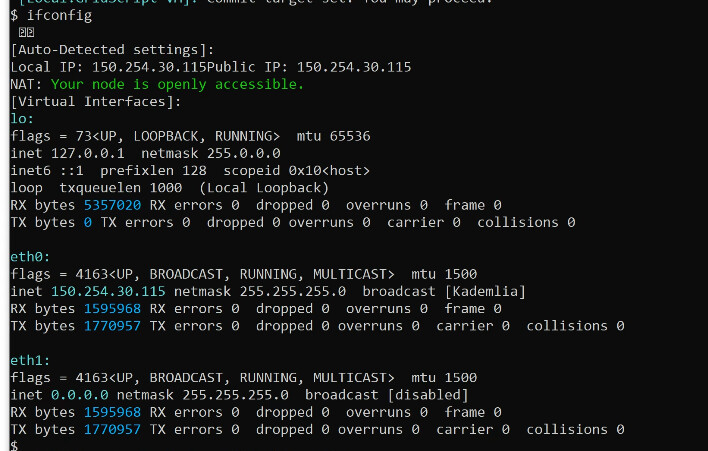

a socket bound to your public IP address or any other IP address which is assigned to the Eth0 Virtual Interface of GRIDNET OS. You may verify IP addresses assigned to GRIDNET OS virtual interfaces by using the

ifconfigcommand from the GRIDNET OS terminal, as shown below:

Then, you should be able to spot the below port bound as shown by netstat -ab (executed on Microsoft Windows):

![]()

You may use the GRIDNET OS ifconfig command to reassign IP addresses to GRIDNET OS Virtual Interfaces as needed. Note that a reboot (of GRIDNET Core) might be required.

- TCP Socket on any interface (0.0.0.0) port 443 - that’s for the internal web-server and port 444 for the anonymous web-proxy services (currently not multiplexed over port 443)
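If you’d rather not eyeball the list, the Python sketch below checks `netstat` output (e.g. pasted from `netstat -an` or `netstat -ab`) against the bindings listed above. It is a hypothetical helper, not something shipped with GRIDNET Core. `eth0_ip` is whatever address `ifconfig` reports for Eth0, and since the list doesn’t say which local address the port 444 proxy socket uses, that entry accepts any address (an assumption).

```python
from typing import List, Optional, Set, Tuple

Binding = Tuple[str, str, int]  # (protocol, local address, local port)


def parse_bindings(netstat_text: str) -> Set[Binding]:
    """Collect (proto, address, port) from the Local Address column of netstat output."""
    bindings: Set[Binding] = set()
    for line in netstat_text.splitlines():
        parts = line.split()
        # Skip headers and the "[process.exe]" / service-name lines that -b adds
        if len(parts) < 2 or parts[0] not in ("TCP", "UDP"):
            continue
        address, _, port = parts[1].rpartition(":")  # works for [::]:443 too
        bindings.add((parts[0], address, int(port)))
    return bindings


def missing_bindings(netstat_text: str, eth0_ip: str) -> List[Tuple[str, Optional[str], int]]:
    """Return the expected bindings that do not show up; None means any address."""
    required = [
        ("UDP", "[::]", 443),
        ("UDP", "0.0.0.0", 443),
        ("UDP", eth0_ip, 443),
        ("TCP", "0.0.0.0", 443),
        ("TCP", None, 444),  # assumption: local address of the proxy socket not checked
    ]
    found = parse_bindings(netstat_text)
    return [
        (proto, addr, port)
        for proto, addr, port in required
        if not any(p == proto and pt == port and (addr is None or a == addr)
                   for p, a, pt in found)
    ]
```

An empty list means every entry from the checklist is present; anything returned is a binding worth investigating first.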

Finally, you may want to play around with Wireshark (https://www.wireshark.org/) and see whether any UDP datagrams are making their way onto your computer from any of the remote endpoints your instance of GRIDNET Core is trying to maintain connectivity with.

I went ahead and investigated our code-base (still in progress, but nothing suspicious spotted so far).

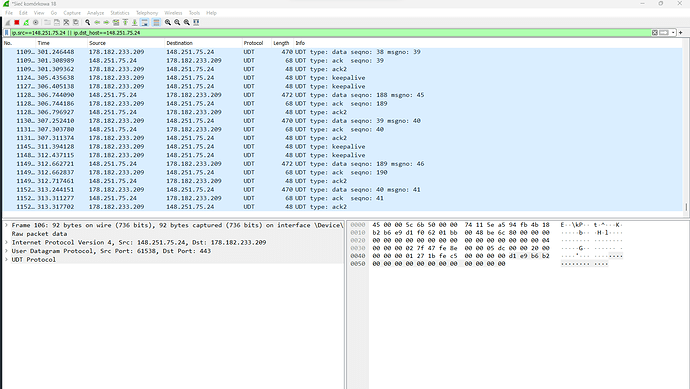

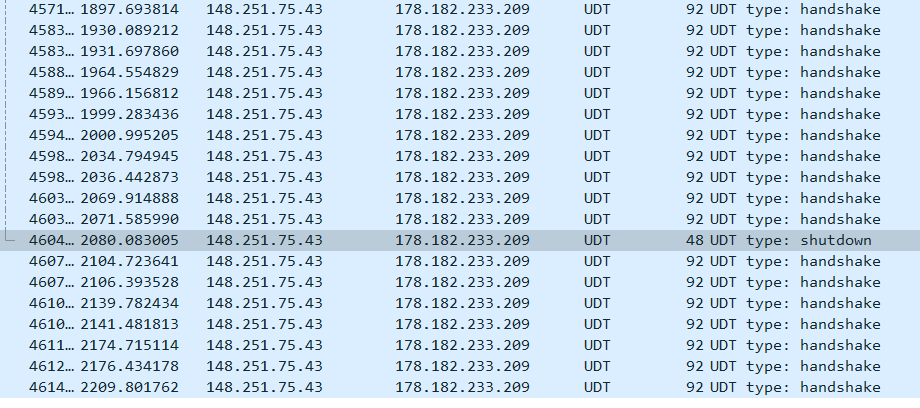

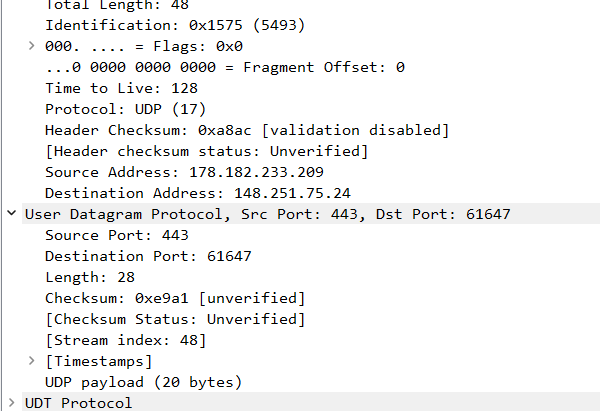

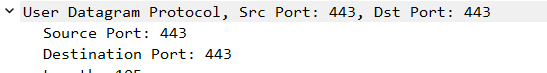

So I fired up Wireshark.

I’ve used the filter below to isolate IP datagrams coming from and going to one of the full-nodes GRIDNET Core was trying to maintain communication with:

`ip.src==148.251.75.24 || ip.dst_host==148.251.75.24`

Notice that Wireshark does recognize the UDT protocol (which is implemented over UDP).

- For outgress data:

We have data arriving from port 443 (remote node) at my local computer on port 61647.

I’m not behind NAT, so probably the other node was the one that initiated the connection in the first place…

I’ll be looking more into this tomorrow…

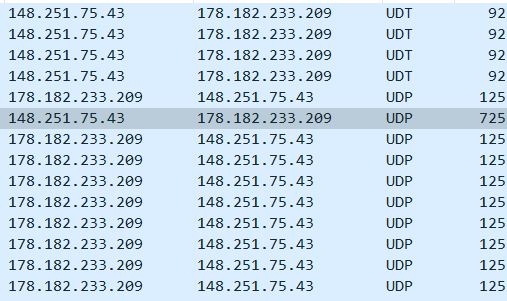

It’s interesting to note how Wireshark depicts all of the stages in detail; i.e. when GRIDNET Core (my local instance) was killed, Wireshark kept showing remote nodes still attempting to reconnect with it (from constantly incremented source ports):

Notice the detected UDT connection stages (handshake, shutdown etc.).

Below I attach a sample session between a bootstrap node (148.251.75.43) and my computer (178.182.233.209). One may spot data exchange over two protocols: UDT (implemented over UDP) and unsolicited datagrams over plain UDP.

Those unsolicited UDP datagrams carry Kademlia-related data exchange, which accounts for peer discovery.
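For context, Kademlia peer discovery is built on the XOR distance between node IDs: a node files peers into buckets by the highest differing bit, and lookups repeatedly query the known peers closest to a target ID. The Python sketch below shows that generic metric only. The 160-bit SHA-1 IDs and k = 20 come from the original Kademlia design and are assumptions here, as the thread doesn’t say what GRIDNET uses; all function names are hypothetical.

```python
import hashlib
from typing import List


def node_id(label: str) -> int:
    """Hypothetical: a 160-bit ID from SHA-1, as in the original Kademlia paper."""
    return int.from_bytes(hashlib.sha1(label.encode()).digest(), "big")


def xor_distance(a: int, b: int) -> int:
    return a ^ b


def bucket_index(self_id: int, other_id: int) -> int:
    """k-bucket a peer falls into: position of the highest differing bit (-1 if identical)."""
    return (self_id ^ other_id).bit_length() - 1


def k_closest(target: int, known: List[int], k: int = 20) -> List[int]:
    """The k known peers closest to `target` under the XOR metric."""
    return sorted(known, key=lambda peer: xor_distance(peer, target))[:k]
```

With 4-bit toy IDs, `k_closest(0b1000, [0b0001, 0b1100, 0b1001], k=2)` picks `0b1001` (distance 1) and `0b1100` (distance 4) over `0b0001` (distance 9).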

initial discovery and data exchange.zip (18.5 KB)

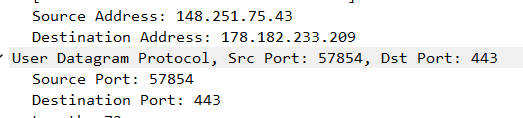

The bootstrap peer had already discovered my IP address, so it was the one getting in touch with my computer in the first place (its source port is 57854, while it connects to my node at port 443, as expected).

Packet No. 5669 below (the very first):

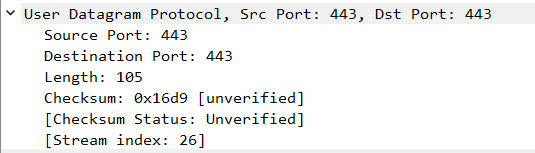

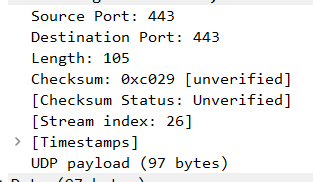

Starting at packet No. 17785, Kademlia traffic begins:

The packet is sent from my port 443 to port 443 on the remote endpoint.

Frame No. 26176 (just two frames below, in the capture file):

Kademlia-related outgoing frame. We can see that the source and destination ports are the same. That is by design, to improve hole-punching properties and thus NAT traversal.

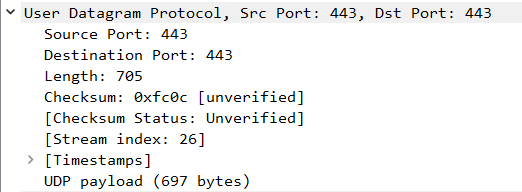

Those datagrams, 697 bytes in length, are responses from the Bootstrap node to Kademlia inquiries; I’ve verified this 100%:

If a node fails at peer discovery and displays the typical “Bootstrap node failed” message in red, these datagrams are most likely missing.

Now, the datagrams 97 bytes in length are the Kademlia inquiries sent from my node to the bootstrap node:

All that Kademlia-based data exchange happens over UDP with both source and destination ports equal to 443, always, at each and every stage. For inquiries:

and for responses:
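To apply the same check to your own capture, the Python sketch below counts inquiries sent to, and responses received from, a bootstrap node, going by what was observed in this particular capture: 443 <=> 443 on both ends, 97-byte inquiries and 697-byte responses. `Frame` and `kademlia_summary` are hypothetical names, extracting the frames from the capture file is left out, and the sizes are a heuristic taken from this one session rather than a protocol guarantee.

```python
from typing import Iterable, NamedTuple, Tuple


class Frame(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    length: int  # frame length as listed in the capture


def kademlia_summary(frames: Iterable[Frame], bootstrap_ip: str, port: int = 443,
                     inquiry_len: int = 97, response_len: int = 697) -> Tuple[int, int]:
    """Count (inquiries sent to, responses received from) a bootstrap node.

    Sizes default to the ones observed in this particular capture; they are
    a heuristic, not a protocol guarantee.
    """
    inquiries = responses = 0
    for f in frames:
        if f.src_port != port or f.dst_port != port:
            continue  # Kademlia traffic here is always 443 <=> 443
        if f.dst_ip == bootstrap_ip and f.length == inquiry_len:
            inquiries += 1
        elif f.src_ip == bootstrap_ip and f.length == response_len:
            responses += 1
    return inquiries, responses
```

Inquiries going out with zero responses coming back would match the “Bootstrap node failed” symptom described above.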

I have introduced some changes to the UDT library which may further improve its NAT-traversal properties.

In addition, it would now be obvious which ports other nodes would be attempting to communicate from, allowing for dedicated, static NAT-routing entries for the incoming traffic (should hole-punching fail for whatever reason).

Hole punching is simple: node B sends a UDP datagram to node A, so that routers take note that such a communication pattern is intended, and so the next time node A responds with another UDP packet, the datagram is let through. Routers keep track of… sessions. Sessions, because with UDP there are no ‘connections’, but then… it’s all about naming conventions. UDT has connections, and these are implemented atop UDP.
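The sequence above can be sketched as a toy simulation in Python. It simplifies things under stated assumptions: both routers keep port 443 unchanged (no port rewriting), sessions never expire, and `SessionTable` / `hole_punch` are hypothetical names. If node A is also behind a filtering router, B’s very first datagram may be dropped on arrival; it has still done its job, because B’s router now expects traffic from A.

```python
from typing import List, Set, Tuple

Endpoint = Tuple[str, int]  # (public IP, UDP port)


class SessionTable:
    """Toy router: admits inbound UDP only for flows it has seen go out."""

    def __init__(self) -> None:
        self.flows: Set[Tuple[Endpoint, Endpoint]] = set()

    def outbound(self, local: Endpoint, remote: Endpoint) -> None:
        self.flows.add((local, remote))  # "take note" of the intended pattern

    def admits(self, local: Endpoint, remote: Endpoint) -> bool:
        return (local, remote) in self.flows


def hole_punch(a: Endpoint, b: Endpoint) -> List[Tuple[str, bool]]:
    router_a, router_b = SessionTable(), SessionTable()
    log = []
    # 1. B -> A: B's router records the flow; A's router has no session, so it drops it
    router_b.outbound(b, a)
    log.append(("B->A", router_a.admits(a, b)))
    # 2. A -> B: B's router recognises the pattern and lets it through
    router_a.outbound(a, b)
    log.append(("A->B", router_b.admits(b, a)))
    # 3. B -> A again: now A's router has a matching session as well
    router_b.outbound(b, a)
    log.append(("B->A", router_a.admits(a, b)))
    return log
```

For `a = ("178.182.233.209", 443)` and `b = ("148.251.75.43", 443)`, `hole_punch(a, b)` returns `[("B->A", False), ("A->B", True), ("B->A", True)]`: after one datagram in each direction, both routers let the same-port<=>same-port flow through.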

All sane routers act in such a way.

For those less sane, the changes I have introduced would allow for customized NAT entries, as UDP traffic would always be originating from port 443 (as is already the case with Kademlia).

I won’t be pushing these changes onto the test-net until we have verified that the recent (very extensive) changes to the security sub-system and provisioning have not introduced security loopholes.

@Alpacalypse, all this might not be caused by a NAT after all, since any router, even a lame one, is expected to allow traversal of data-traffic for a same-port<=>same-port tuple after a ‘hole’ has been punched from within the local network. It is your computer initiating the connection, after all.

Actually you may push changes from the main branch onto the test-net on nodes which do not have mining operations enabled, as these follow the others at best anyway…

I went ahead and did so. Anyway, seems like I wanted way too much from Microsoft Windows.

This cannot be done with our current architecture; we’ve already been pushing the limits of what Windows allows for. I cannot introduce yet another layer of abstraction, and I cannot specify a custom source PORT for the UDT protocol when using a separate socket, since Windows does not support `ipi_spec_dst` in `pktinfo` for use by `sendmsg`. Thus it seems this CANNOT be done on Microsoft Windows without binding the socket, and we cannot bind.

Now, I might have a couple of tricks up my sleeve for achieving this, but it would take some time, and I’d say the priority is low; we still haven’t got any evidence that this would help (i.e. in this very case).