The addition of the Blockchain Explorer API, available through the `context` command, opened the door to in-depth analysis of the GRIDNET OS blockchain.

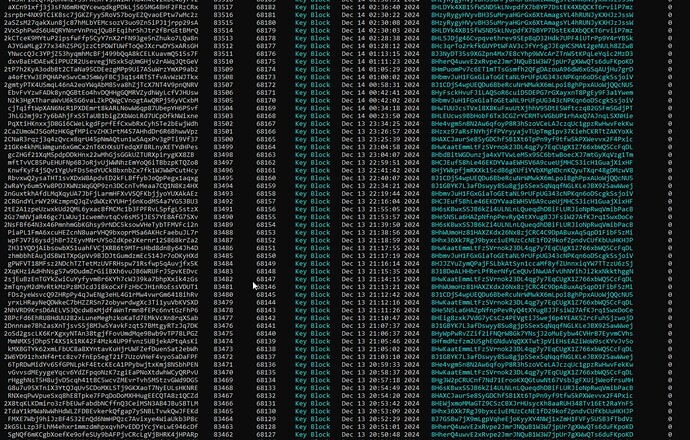

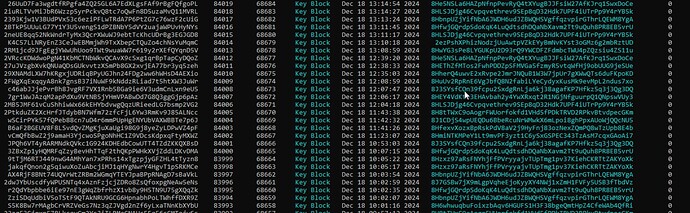

Below is sample output from the `context -c getRecentBlocks` GridScript command:

Let us focus on key blocks, that is, blocks that contain a Proof-of-Work solution.

In any Proof-of-Work-based blockchain system, variations in block production speed are inevitable. At times, you’ll see multiple key blocks arrive in rapid succession, nearly stepping on each other’s heels. Then, just as suddenly, the network will seem to pause, as if catching its breath, with a longer gap before the next block appears. This pattern—bursts of fast finds followed by lulls—is not a fault, but rather a natural consequence of the probabilistic nature of mining combined with the lagging effects of difficulty adjustment mechanisms.
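
The randomness described above can be reproduced in a few lines of Python. This is an illustration under simplifying assumptions, not GRIDNET OS code: hash rate and difficulty are held constant, so block intervals follow an exponential distribution, and the 60-second target is a hypothetical value chosen only for the example.

```python
import random
import statistics


def simulate_intervals(target_s: float, n_blocks: int, seed: int = 1) -> list[float]:
    """Draw block intervals for a network whose difficulty is perfectly tuned.

    With hash rate and difficulty held constant, every hash attempt is an
    independent trial, so the waiting time between blocks is (to a very good
    approximation) exponentially distributed with mean `target_s`.
    """
    rng = random.Random(seed)
    # expovariate() takes the rate (1 / mean), not the mean itself.
    return [rng.expovariate(1.0 / target_s) for _ in range(n_blocks)]


if __name__ == "__main__":
    TARGET = 60.0  # hypothetical target interval in seconds, for illustration only
    gaps = simulate_intervals(TARGET, 10_000)
    fast = sum(g < 0.1 * TARGET for g in gaps)
    slow = sum(g > 3 * TARGET for g in gaps)
    print(f"mean interval: {statistics.mean(gaps):.1f} s")
    print(f"intervals shorter than 10% of the target: {fast}")
    print(f"intervals longer than 3x the target: {slow}")
```

Even with difficulty perfectly tuned, theory predicts that about 9.5 % of intervals (1 - e^(-0.1)) come in under a tenth of the target and about 5 % (e^(-3)) stretch past three times the target, so bursts and lulls appear without any fault in the network.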

The table of recently mined GRIDNET OS blocks provides an excellent window into this phenomenon. In it, you can identify moments when several key blocks, those containing Proof-of-Work solutions, appear just a few seconds or minutes apart. For instance, consider a short stretch in which key blocks are solved one right after another, far faster than the target interval would suggest. Soon after, the table reveals a considerable break, maybe half an hour or even longer, in which not a single key block surfaces. Anyone unfamiliar with the math behind Proof-of-Work might find this alarming, as if the network were oscillating wildly. In reality, the system is doing exactly what it was designed to do: running a statistically random search for a solution under difficulty parameters that take time to adjust.
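
Spotting these clusters and gaps in the table can be automated. The sketch below is a hypothetical helper, not part of the `context` command: it assumes the key-block timestamps have already been extracted from the `getRecentBlocks` output as Unix seconds, and its burst (under a quarter of the target) and lull (over twice the target) thresholds are arbitrary illustrative choices.

```python
def label_gaps(timestamps_s: list[float], target_s: float,
               burst_factor: float = 0.25,
               lull_factor: float = 2.0) -> list[tuple[float, str]]:
    """Label each gap between consecutive key blocks as 'burst', 'lull' or 'normal'.

    burst_factor and lull_factor are hypothetical thresholds expressed as
    fractions/multiples of the target interval.
    """
    ordered = sorted(timestamps_s)  # sort first in case the table lists newest blocks first
    labelled = []
    for earlier, later in zip(ordered, ordered[1:]):
        gap = later - earlier
        if gap < burst_factor * target_s:
            kind = "burst"
        elif gap > lull_factor * target_s:
            kind = "lull"
        else:
            kind = "normal"
        labelled.append((gap, kind))
    return labelled
```

For example, `label_gaps([0, 10, 20, 2000, 2100], 120.0)` labels the gaps as two 10-second bursts, a 1980-second lull and a normal 100-second interval.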

To understand why this occurs, consider the underlying process. The difficulty adjustment algorithm in GRIDNET OS (described in detail here) is intricate, relying on Exponential Moving Averages (EMAs), Simple Moving Averages (SMAs) and smoothing factors to approximate ideal behavior. Like Bitcoin and many other Proof-of-Work blockchains, it aims to keep block intervals near a target time, but it deliberately does so slowly. The Proof-of-Work difficulty adjustment code is full of sampling windows, smoothing (alpha) values and step factors that keep difficulty from thrashing with every incoming block. Instead, adjustments are based on the averaged timestamps and difficulties of a sequence of past blocks.
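
The following is a minimal sketch of how an EMA-smoothed, step-limited retarget rule can behave. It is not the GRIDNET OS implementation: the names `DifficultyState` and `update_difficulty`, the smoothing factor `alpha = 0.1` and the 5 % per-block cap `max_step = 1.05` are hypothetical, and the real algorithm's SMAs and sampling windows are not modeled. Difficulty is treated as proportional to the expected time per block at a fixed hash rate.

```python
from dataclasses import dataclass


@dataclass
class DifficultyState:
    difficulty: float
    ema_interval: float  # smoothed estimate of recent block intervals, in seconds


def update_difficulty(state: DifficultyState, observed_interval: float,
                      target: float, alpha: float = 0.1,
                      max_step: float = 1.05) -> DifficultyState:
    """One step of a hypothetical EMA-smoothed, step-limited retarget rule.

    alpha    -- weight of the newest interval in the exponential moving average
    max_step -- largest factor by which difficulty may rise or fall per block
    """
    # 1. Fold the newest interval into the exponential moving average.
    ema = alpha * observed_interval + (1.0 - alpha) * state.ema_interval
    # 2. Blocks arriving faster than target (ema < target) call for more difficulty.
    ratio = target / ema
    # 3. Clamp the correction so one lucky or unlucky block cannot swing difficulty far.
    ratio = max(1.0 / max_step, min(max_step, ratio))
    return DifficultyState(difficulty=state.difficulty * ratio, ema_interval=ema)
```

Starting from a well-tuned state (EMA equal to a 60-second target), a single 5-second block only moves the EMA to 54.5 s, and the clamp limits the resulting difficulty increase to 5 %.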

This gradual approach is crucial. Difficulty cannot respond instantly to every fluctuation, because a blockchain's security and trust depend on difficulty changing slowly and predictably. If difficulty swung dramatically after every single block, the system would be easy to manipulate. By using a window of blocks and smoothing the data, the algorithm lets temporary lucky streaks and unlucky droughts average out. Yet this smoothing inevitably means that the network sometimes lingers at a difficulty slightly too low or too high. When difficulty is marginally too low, miners may stumble into a series of lucky solves and produce several blocks almost back-to-back. The algorithm needs more data points to be sure this is not just a fluke before it raises difficulty again, and while that data accumulates we see the burst of rapid blocks. Conversely, once difficulty eventually ratchets upward, it may end up slightly too high, causing a longer wait before the next block is found: a natural lull.
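
To make this lag concrete, the hypothetical helper below (not part of GRIDNET OS) counts how many consecutive fast blocks an EMA of block intervals must absorb before its estimate falls below a chosen threshold. It assumes the EMA starts at the target, i.e. difficulty was previously well tuned.

```python
def blocks_until_ema_reacts(target: float, fast_interval: float,
                            alpha: float, threshold: float) -> int:
    """Count consecutive fast blocks before the EMA of intervals drops below `threshold`.

    The EMA starts at `target`; every new block then arrives after
    `fast_interval` seconds.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    if fast_interval >= threshold:
        raise ValueError("the EMA would never fall below the threshold")
    ema, blocks = target, 0
    while ema >= threshold:
        ema = alpha * fast_interval + (1.0 - alpha) * ema
        blocks += 1
    return blocks
```

With a 60-second target, blocks suddenly arriving every 12 seconds and `alpha = 0.1`, the smoothed interval stays above 30 seconds for nine blocks and only drops below it on the tenth; with a more reactive `alpha = 0.5` it takes two. A smaller alpha buys resistance to flukes at the price of a longer burst.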

It's instructive to compare this to Bitcoin. Bitcoin targets a 10-minute average block time, yet anyone who watches the Bitcoin blockchain knows that two blocks may be found within 30 seconds of each other, followed by an eerie ten- or fifteen-minute silence. Despite the enormous amount of cumulative hashing work that goes into every block interval (spread across a vast network of mining hardware), the system cannot guarantee a perfectly even tempo; it provides only a statistical average, and over many blocks things even out. The same is true for GRIDNET OS. By adopting a similar, though not identical, system of difficulty recalibration, the network embraces this natural, stochastic rhythm and meets the target interval on average over the long haul.
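
The Bitcoin figures above follow from a standard simplification: if hash rate and difficulty are constant, the time $T$ between blocks is exponentially distributed with mean $\mu = 600$ s. Under that assumption:

```latex
\begin{aligned}
P(T \le t) &= 1 - e^{-t/\mu}, \qquad \mu = 600\,\text{s} \\
P(T \le 30\,\text{s}) &= 1 - e^{-30/600} = 1 - e^{-0.05} \approx 0.049 \\
P(T \ge 900\,\text{s}) &= e^{-900/600} = e^{-1.5} \approx 0.223
\end{aligned}
```

So roughly one Bitcoin block in twenty follows its predecessor within 30 seconds, while more than one interval in five exceeds fifteen minutes, all at nominal difficulty.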

The table of recent GRIDNET OS blocks confirms all of this. You may find a cluster of key blocks at, say, Dec 18 13:14, 13:14:27 and 13:13:24 (an example picked from the data, showing three key blocks within about two minutes of one another), and elsewhere in the table, at another date and time, a period in which no key block appears for a significantly longer interval. The end result: over hours, days and weeks, the overall average block interval hovers near the network's intended target, fulfilling the protocol's fundamental objective.
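
Why bursts and lulls cancel out follows from simple arithmetic: the gaps between consecutive blocks telescope, so their sum is just the time between the first and the last block. The hypothetical helper below (timestamps again assumed to be Unix seconds) makes this explicit.

```python
def mean_interval(timestamps_s: list[float]) -> float:
    """Average gap between consecutive blocks, given Unix timestamps in seconds."""
    ordered = sorted(timestamps_s)
    if len(ordered) < 2:
        raise ValueError("need at least two blocks")
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    # The gaps telescope: sum(gaps) == ordered[-1] - ordered[0],
    # so how the blocks are spaced inside the window does not matter.
    return sum(gaps) / len(gaps)
```

Both `mean_interval([0, 5, 10, 15, 240])` (three rapid blocks, then a long pause) and `mean_interval([0, 60, 120, 180, 240])` (perfectly even spacing) return 60.0: over the window, the burst and the lull average out exactly.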

What might initially look like wild swings in timing are in fact subtle waves of probability. The Proof-of-Work difficulty adjustment algorithm, with its carefully crafted functions, does not try to react to every gust of wind in the data; instead, it corrects the course of difficulty along a gentle, predictable curve. When several quick blocks show up and their timestamps in the table sit close together, the difficulty adjustment code doesn't panic. It notes the event and waits to see whether more data corroborates the need for a difficulty increase. By the time it applies that increase, the initial rush of easy blocks may have ended, but difficulty will now be slightly higher, and the network may see a slower block or two before returning to normal.

This behavior is a sign that the system is working as intended, just as in Bitcoin. The same reasoning applies to GRIDNET OS: these fluctuations are normal, expected, and the mark of a healthy, probabilistic, difficulty-adjusting Proof-of-Work blockchain. Over long timeframes everything averages out, so while the dance of short bursts and pauses continues, the music itself, the carefully targeted block interval, keeps a steady, if probabilistically flexible, beat.